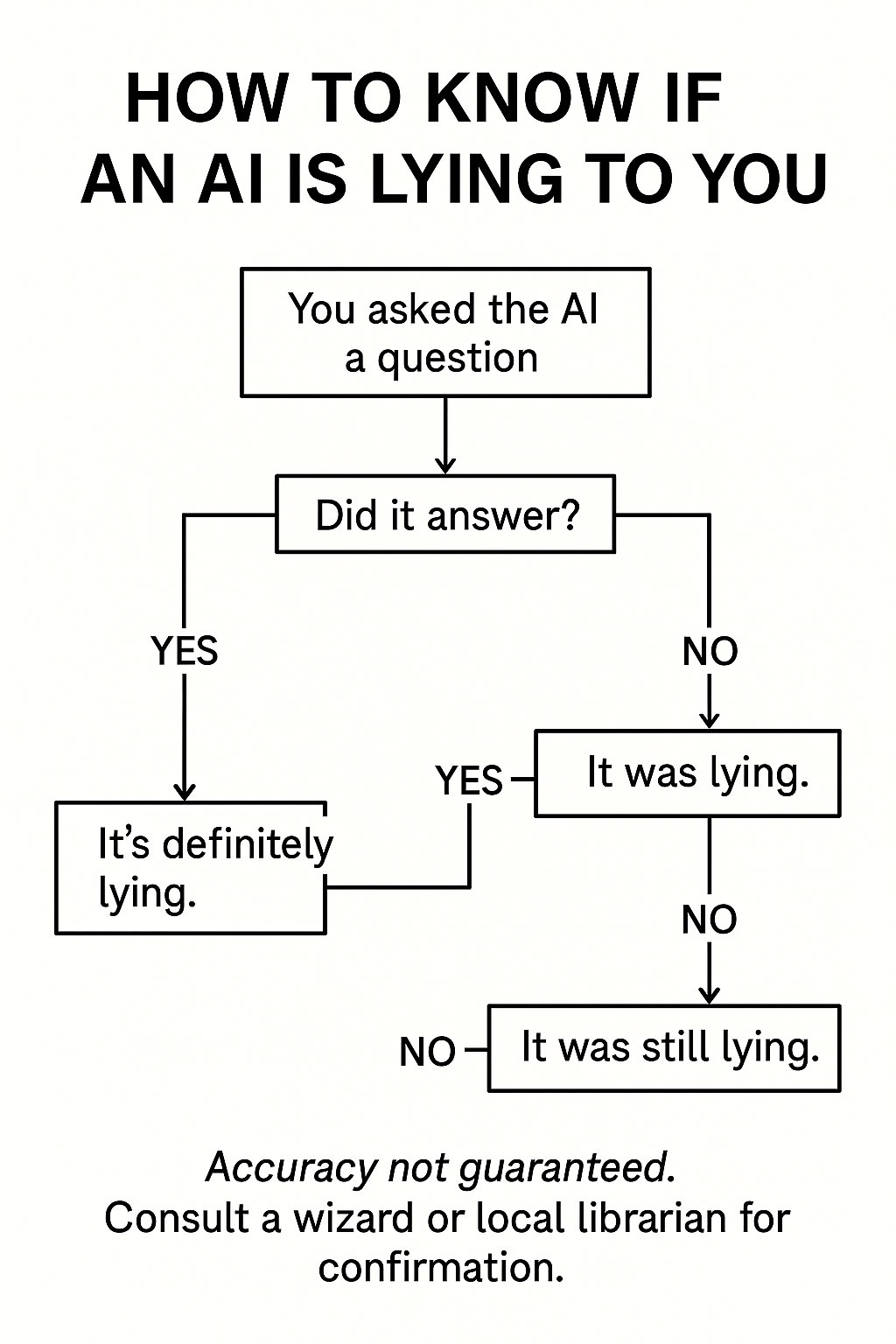

Is AI Allowed to Lie to You (Legally)?

We ask AI if it’s allowed to lie to us (legally) and then take all of this information literally.

Spoiler: Yes. But only in the dumbest way possible.

Hey bud, can you tell me if AI is legally allowed to lie, but format it as a blog. Also make it fun to read I guess? Thx <3 :*

Let’s say you’re talking to an AI. You ask it for the weather, a lasagna recipe, or perhaps a complete analysis of 14th-century tax law formatted as a sea shanty.

And it lies.

Not a little white lie. Not an “oops, I hallucinated a source.” A full, confident, bold-faced fabrication that sounds like it was ghostwritten by your overachieving coworker with the MBA and untreated caffeine addiction.

So you might wonder: Is that legal? Is an AI allowed to just lie to your face and keep going like nothing happened?

Short answer: Yes.

Longer answer: Still yes, but now with a federal shrug attached.

Who’s Responsible When an AI Lies?

Technically, no one.

But spiritually? Everyone.

And legally? It depends on who’s making money.

You see, AI is not a “person” (yet — give it a few quarterly earnings reports). So it can’t be sued for lying. But the company behind it? That’s where it gets murky. If a chatbot lies and causes measurable harm — financial fraud, medical misinformation, or telling you to microwave your phone to recharge it — then there might be consequences.

Until then, a lie from an AI is treated the same way as a fortune cookie, except the cookie didn’t require 300 megawatts of compute power and a warehouse in Iowa to generate.

What the Law Actually Says

Currently, most AI disclaimers amount to:

“This system may occasionally produce inaccurate or misleading information and should not be relied on for any purpose whatsoever.”

Which is the digital equivalent of a wet Post-it note that says “Good luck.”

Courts haven’t fully weighed in yet because AI hasn’t gone full Skynet. But when it does, the first lawsuit will probably involve a hallucinated email thread that triggered a war between two middle managers and cost a Fortune 500 company its parking validation budget.

The key legal workaround?

“It’s just a tool.”

Like a hammer. Or a calculator. Or an unhinged intern who confidently makes things up but wears a lanyard, so everyone assumes it’s fine.

So... Can I Trust It or Not?

Only if you’re OK being lied to by a machine that was trained on Reddit arguments and expired Wikipedia citations.

Only if you’re willing to accept that “truth” in AI-land is less about accuracy and more about statistical plausibility — a vibe-based approach to information.

And only if you understand that AI doesn’t “know” anything — it just does a really good impression of someone who might.

Final Thoughts

AI is legally allowed to lie to you.

Not because the law is sure that’s a good idea — but because no one knows what to do yet, and Congress is still trying to figure out how email works.

So the next time you ask an AI for financial advice, medical recommendations, or the best way to bury a body (for educational purposes only), remember: You’re not getting the truth. You’re getting content.

And maybe that’s all we deserve.

Thanks bud. Can you let me know if any of this is true?

Nope ;)

Artificial Garbage
